Tales From the Incident Response Cliff Face – Case Study 3

In this installment of Tales from the Incident Response Cliff Face, we recount a ransomware attack against a European product manufacturing and distribution company.

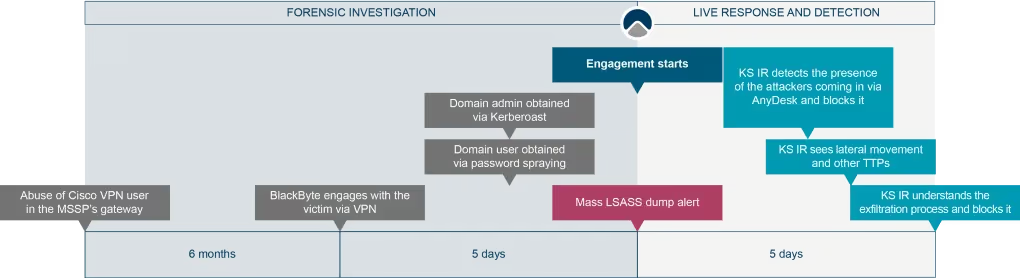

This particular ransomware attack is interesting for several reasons, including the fact that it was carried out with assisted initial access and the threat mitigation was in real time, i.e. as the attack was taking place.

In this report, I’ll cover how the team swiftly counteracted the ongoing threats, navigating compromised systems and evading attackers.

I’ll also dissect the ransomware’s kill chain, from initial access to privilege escalation and lateral movement as well as how we secured the environment.

Like all of the Cliff Face reports, this tale highlights the need for robust security measures and rapid incident response, which I detail at the end.

ARCHEOLOGY VS ADRENALINE

Preamble

Our team frequently helps companies handle ransomware incidents, providing support that includes investigation into an attacker’s initial access vector and any persistence mechanisms used. We usually follow up with a heatmap of the attacker’s activities to unravel the kill chain in as much detail as we can gain from the compromised or restored environment. On occasion, and if required, we also engage with threat actors in negotiations.

But the common denominator in most ransomware attacks is that by the time one is investigated, it’s essentially a cold case. The attack has already unfolded, criminals have encrypted the environment and exfiltrated company data and, in many cases, the organization has received bad publicity and suffered some financial damage. The victim will have had to restore operations and harden security, and also deal with stakeholder, customer and regulator notifications, potential legal repercussions, and the question of whether or not to pay the ransom.

But what if organizations could avoid the calamity of dealing with the aftermath of a ransomware attack, including preventing downtime to operations?

In this edition of the Incident Response Cliff Face, I detail an experience where I helped a client do just this – dissecting the kill chain technicalities of the ransomware operators who were trying to exfiltrate data, even ‘running into’ the attackers in real time inside the network as I was conducting the analysis.

Beyond this, I also highlight what made the victim particularly vulnerable and share key takeaways from our efforts, including what businesses can do to protect themselves in the future.

WATSON, WE HAVE A PROBLEM

The victim was one of the largest product manufacturing and distribution companies in Europe, with billions of dollars in annual revenue. It had outsourced its operational security, which included monitoring and EDR agent deployment, to a third-party managed security services provider (MSSP).

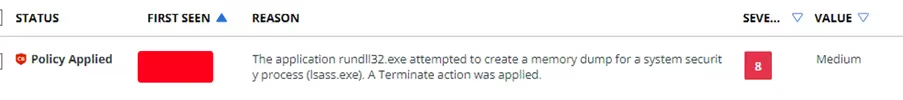

We were called to investigate the following alert, which had spawned from many devices at once (something no-one ever wants to wake up to):

Figure 1. Dumping LSASS with comsvcs.dll

This is a documented method for dumping the memory of the Local Security Authority Subsystem Service (LSASS) process so that it can be analyzed offline, which eliminates the need to run tools like mimikatz on the compromised host.
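
To make the technique concrete, here is a minimal detection sketch in Python. It assumes the commonly documented form of the command, in which rundll32 calls the MiniDump export of comsvcs.dll; the exact command line from the alert is not reproduced here, and the function name is hypothetical.

```python
import re

# Assumption: the dump is requested through rundll32 calling the MiniDump
# export of comsvcs.dll by name, as in publicly documented examples.
_COMSVCS_DUMP = re.compile(
    r"rundll32(?:\.exe)?\b.*\bcomsvcs(?:\.dll)?\b.*\bminidump\b",
    re.IGNORECASE,
)


def looks_like_comsvcs_dump(command_line: str) -> bool:
    """Return True if a process command line resembles a comsvcs.dll MiniDump call."""
    return bool(_COMSVCS_DUMP.search(command_line))


if __name__ == "__main__":
    sample = r"rundll32.exe C:\Windows\System32\comsvcs.dll, MiniDump 624 C:\temp\out.dmp full"
    print(looks_like_comsvcs_dump(sample))
```

A check this simple would likely miss variants that rename the DLL or call the export in another way, so it should be read as an illustration of the pattern rather than a complete rule.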

It is still an off-the-shelf technique, so I asked the client why the action had only raised an alert (rather than being blocked) and whether some custom settings on their EDR solution might be behind this.

It was at this point I learned that the company was in the middle of migrating their fleet to another EDR solution, which would be in audit mode only until the migration was complete.

So, essentially, it could see but it could not take action.

This meant that the fleet we were dealing with was quite heterogeneous: we would need to be mindful of both EDR consoles when checking for events as well as the usual blind spots that emerge when machines are waiting for the EDR to be installed.

A FORGOTTEN WEAK BACKDOOR

That said, we still had a logical next point of inquiry. The data, as well as years of experience, tell us that compromised credentials for publicly exposed services (like VPN and Remote Desktop Protocol (RDP)) are by far the most common entry points for ransomware incidents.

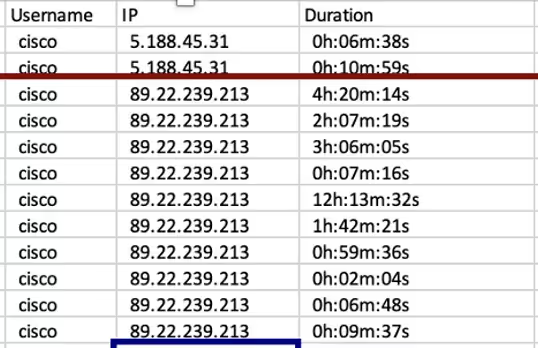

So, we asked the company about MFA enforcement on their VPN gateway and were told that they had implemented this control. However, unbeknownst to our client, their MSSP was accessing their environments via their very own VPN gateway where MFA was not being enforced. A log analysis of this ‘unknown’ gateway revealed that the attackers had been taking advantage of it for months via the local user cisco and that, in the week prior to the LSASS alert, significant activity by the BlackByte gang was also taking place.
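
The kind of gateway log review described above can be sketched as follows. This is a minimal illustration, not the analysis we actually ran: the record layout (`user`, `src_ip`, `result`) and the hosting-provider ranges are hypothetical, and the ranges shown are documentation-only prefixes standing in for a real IP reputation feed.

```python
from ipaddress import ip_address, ip_network

# Hypothetical hosting/VPS ranges (documentation-only prefixes, not real providers).
HOSTING_RANGES = [ip_network("203.0.113.0/24"), ip_network("198.51.100.0/24")]


def suspicious_vpn_logins(records, hosting_ranges=HOSTING_RANGES):
    """Return successful VPN logins whose source IP falls inside a hosting range."""
    hits = []
    for rec in records:
        if rec["result"] != "success":
            continue  # only successful sessions are of interest here
        src = ip_address(rec["src_ip"])
        if any(src in net for net in hosting_ranges):
            hits.append(rec)
    return hits


if __name__ == "__main__":
    sample = [
        {"user": "cisco", "src_ip": "203.0.113.7", "result": "success"},
        {"user": "alice", "src_ip": "192.0.2.10", "result": "success"},
    ]
    for hit in suspicious_vpn_logins(sample):
        print(hit["user"], hit["src_ip"])
```

Run over several months of logs, a filter like this makes a local account that repeatedly connects from rented infrastructure stand out quickly.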

CLIMBING THE (PRIVILEGE) LADDER

The LSASS dump alert was generated by user accounts that, unsurprisingly, were Domain Administrators. Further investigation revealed how the attacker ‘doubled’ their privilege escalation:

The VPN user cisco, which the company confirmed had a weak password, connected from the IP address 89.22.239.213 (see Figure 2). However, because this local VPN user had LAN access only, its rights were limited: it could communicate over the network but could not access the Windows domain.

Step 1 – Getting Windows Domain Access

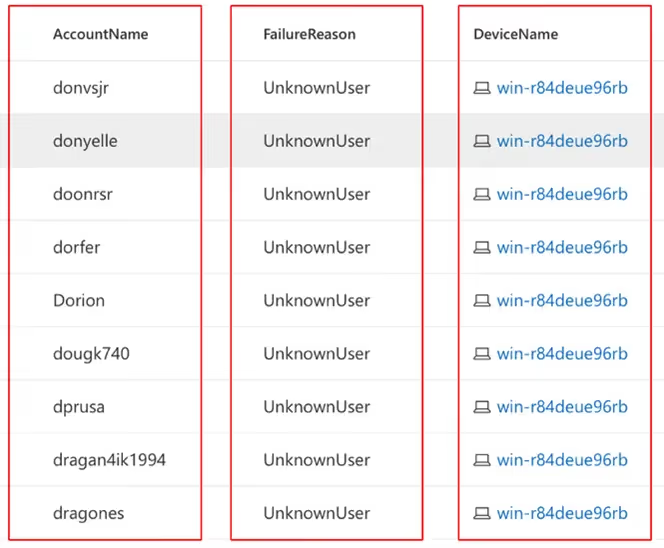

Investigation into the authentication events that happened shortly afterwards revealed that the attacker doubled down on their efforts to find a user account that would grant access to the Windows domain. They obtained this via a password spraying attack (Figure 3).

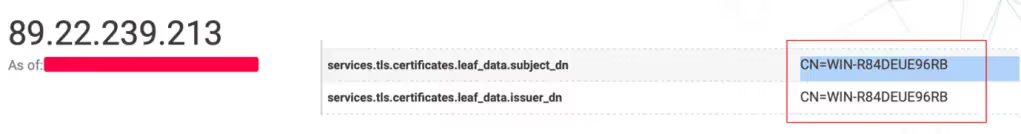

We were able to show that these different malicious activities were associated with the same attacker by looking up the TLS certificate in Censys (Figure 4). This revealed that the RDP service was configured with TLS, which indicated a positive match between host and IP.

After obtaining a valid username, the attacker then successfully brute forced the password.
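
Password spraying of the kind described above tends to leave a recognizable trace: one source failing to authenticate against many different accounts. The sketch below illustrates that idea. It assumes failed-logon events (for example, Windows Event ID 4625) have already been reduced to `(source, username)` pairs; the function name and threshold are hypothetical.

```python
from collections import defaultdict


def find_password_spraying(failed_logons, min_accounts=10):
    """Map each source that failed against many distinct accounts to the account count.

    failed_logons: iterable of (source, username) pairs taken from failed-logon events.
    min_accounts: hypothetical threshold; tune it to the environment.
    """
    accounts_by_source = defaultdict(set)
    for source, username in failed_logons:
        accounts_by_source[source].add(username.lower())
    return {
        source: len(accounts)
        for source, accounts in accounts_by_source.items()
        if len(accounts) >= min_accounts
    }


if __name__ == "__main__":
    events = [("10.0.0.5", f"user{i}") for i in range(12)]
    events += [("10.0.0.9", "alice")] * 20  # one user mistyping a password
    print(find_password_spraying(events))
```

Counting distinct accounts rather than raw failures is the design choice that separates spraying from a single user repeatedly mistyping a password.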

The account in question belonged to a partner from another company who needed temporary access to the target organization’s resources. The organization had created a domain account and expected them to change the default password, which never happened, creating a vulnerability that was present for years.

Step 2 – Getting Domain Administrator Privileges

The LSASS dumping alert was not the only alert in the EDR console. Multiple alerts had been missed and I combed through days’ worth of data to find the ones that were relevant to this incident. Unfortunately, these alerts were not processed quickly enough by the third-party MSSP (possibly a result of the analysts having to monitor two separate dashboards until the EDR migration was complete), which allowed the attackers to roam freely.

The key question of how final privilege escalation happened was answered by one of the alerts I found in the new EDR console:

After obtaining the domain user (obscured by the red boxes in Figure 5), the malicious actor went after the weak domain admin credentials.

The alert showcases a typical Kerberoast attack.

And it would have been caught by the MSSP—especially as it was not that subtle—if they had picked up key indicators.

- The first indicator was the LDAP query with the wildcard on the service principal name (SPN). The EDR had generated an alert on this (see the ‘search filter’ in Figure 5 above), which they didn’t pick up on.

- A second indicator would have been available to them if they had leveraged Windows event logs (particularly some events with EventID 4769 on the domain controllers). But alas, the audit policy was not set up to collect them.

- A third indicator would also have been available to them if they had implemented honeypot accounts, which would generate high fidelity alerts. But again, the MSSP had unfortunately not done this.
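
The first two indicators above can be sketched in a few lines of Python. This is a hedged illustration only: the dictionary keys (`event_id`, `account`, `service_name`, `ticket_encryption_type`) are hypothetical names for fields exported from Event ID 4769, the threshold is arbitrary, and the check for encryption type `0x17` (RC4) reflects how Kerberoasting commonly appears rather than anything specific to this case.

```python
import re
from collections import defaultdict

# Indicator 1: an LDAP search filter asking for every account that has an SPN.
_SPN_WILDCARD = re.compile(r"\(\s*serviceprincipalname\s*=\s*\*\s*\)", re.IGNORECASE)


def is_spn_wildcard_query(ldap_filter: str) -> bool:
    """Return True if the LDAP filter contains (servicePrincipalName=*)."""
    return bool(_SPN_WILDCARD.search(ldap_filter))


def kerberoast_suspects(events, min_services=5):
    """Indicator 2: accounts requesting RC4 service tickets for many distinct services."""
    services_by_account = defaultdict(set)
    for ev in events:
        if ev["event_id"] != 4769:
            continue
        if ev["ticket_encryption_type"].lower() != "0x17":
            continue  # keep only RC4-HMAC tickets
        if ev["service_name"].endswith("$"):
            continue  # skip computer accounts
        services_by_account[ev["account"]].add(ev["service_name"])
    return {
        account: sorted(services)
        for account, services in services_by_account.items()
        if len(services) >= min_services
    }


if __name__ == "__main__":
    print(is_spn_wildcard_query("(&(objectCategory=user)(servicePrincipalName=*))"))
```

Neither check is subtle, which is the point: an attack this noisy can be caught with modest logic, provided the events are collected in the first place.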

All this to say that the attackers didn’t think twice when launching this high-risk high-reward attack because they correctly assumed that proper defenses were not implemented.

Shortly after the alert had been issued, we saw evidence that the attackers were using some Kerberoastable domain admin accounts with cleartext passwords, suggesting that the Kerberoast attack had been successful (See Figure 6). It goes without saying that, had the company implemented strong passwords, the attack would not have reached its objective.

So, with this being achieved, privilege escalation was complete.

It is worth mentioning that the attackers moved from nobodies to domain admins in exactly three passwords. They guessed the password of the VPN user, then that of the domain user, and finally that of the domain admin via Kerberoasting, all without using any malware in the environment; the attackers performed these actions from the comfort of their own machines. The fact that they used unmanaged assets to do this is a reminder that security tooling investments must be complemented with proper hygiene around securing accounts, particularly the privileged ones.

LATERAL MOVEMENT TO GET MORE

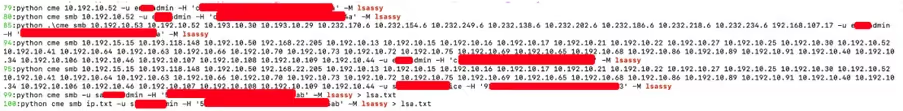

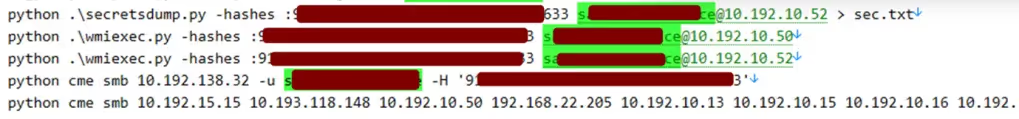

Our investigation showed that—as with most threat actors—these attackers did not stop at obtaining domain admin privileges; they wanted to go deeper to access more accounts and identify more targets (Figures 6 and 7).

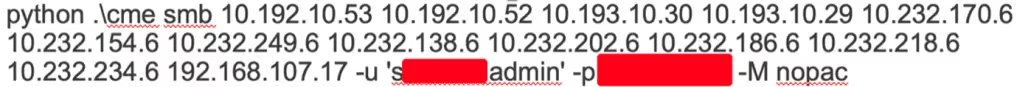

In this case, the attackers selected a couple of hosts where they could make themselves comfortable. They set up shop by installing Python and preparing the tools they would need, which included the Impacket suite.

Specifically, we see that the attackers probed the Domain Controllers for the vulnerabilities CVE-2021-42278/CVE-2021-42287 (Figure 6). We also see that the threat actors remotely executed the lsassy module from crackmapexec on the machines to compromise multiple privileged accounts (Figure 7), the action that triggered the LSASS dumping alert in the first place (Figure 1).

We also saw evidence of an attempt to dump NTDS.dit (see Figure 8), but the attackers met a dead end. So, they moved laterally using RDP as well as other common tools, such as the Impacket suite, which is popular with penetration testers.

WHAT THE THREAT ACTORS DID NEXT: GADGETS

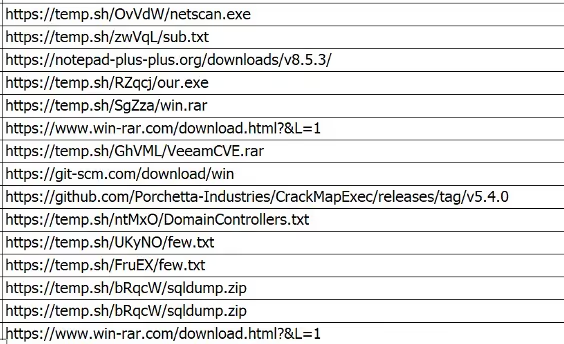

You may have noticed that the threat actors used common off-the-shelf offensive tooling. In fact, they downloaded some of them from temp.sh (see Figure 9) and stored them openly for ease of access.

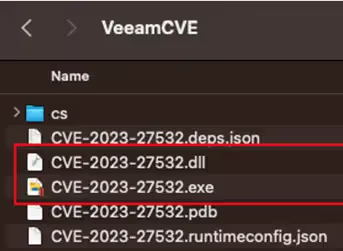

One tool in particular appears to target CVE-2023-27532 (see Figure 10). This would enable the attacker to obtain encrypted credentials stored in the Veeam Backup & Replication configuration database.

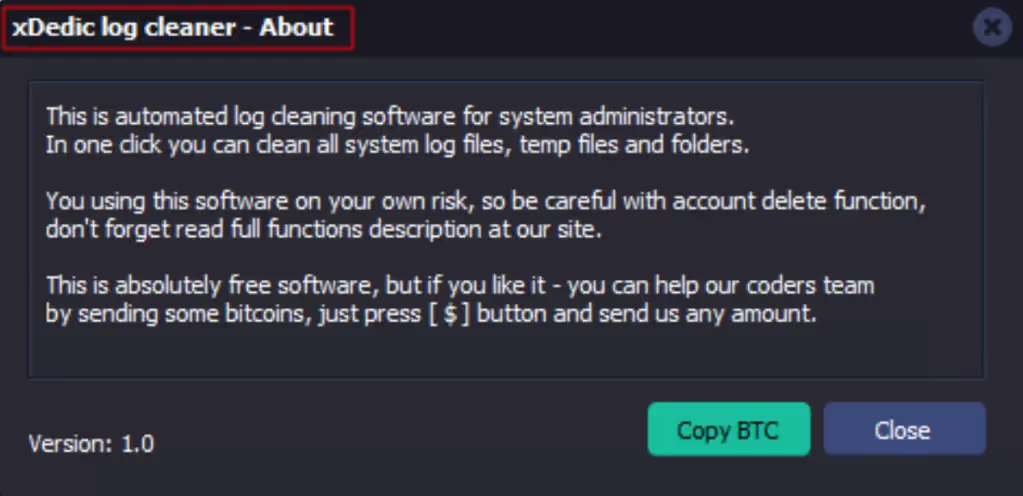

Another off-the-shelf tool, used to clean system logs among other things, was also activated (see Figure 11).

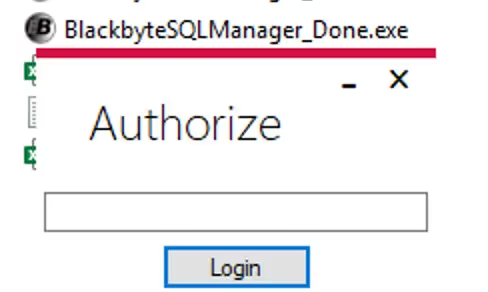

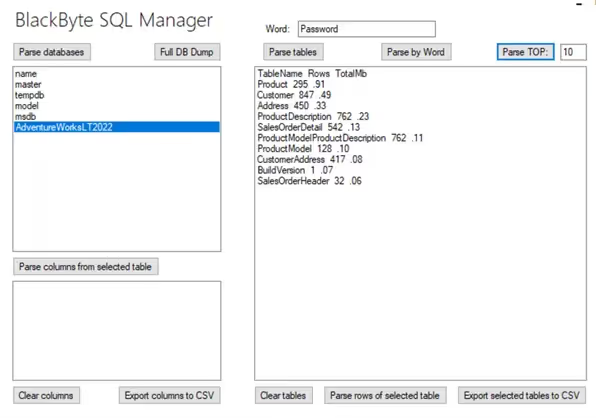

Finally, the most interesting tool I saw was one that helped the threat actors prepare data for exfiltration (see Figure 12). By using the BlackByteSQLManager, the attackers would get visibility into the size and potential value of the data. This would then enable them to prioritize, through keyword lookups, which data to extract, with a view to identifying what could be best leveraged in a ransom request.

An Insight Into How The Threat Actor Selected Their Data

Up to this point, I have been explaining how the threat actor learned about their victim. The fact that we interrupted them halfway through their operation gave us access to the tool, which in turn revealed their modus operandi as well as their name.

It was safe to assume that we were dealing with the BlackByte ransomware gang (see name at top of Figure 12). Further, with a little effort, I was able to establish what the password to this tool actually was.

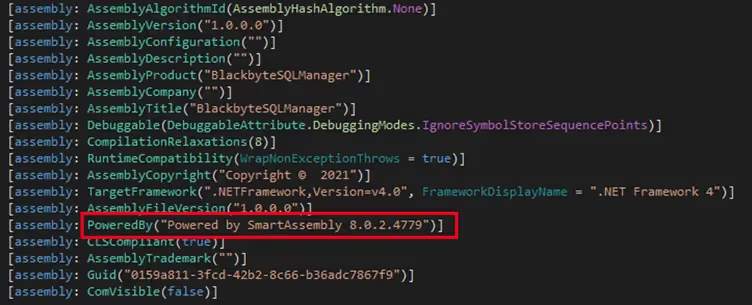

The tool is a .NET assembly, which appears to have been obfuscated with SmartAssembly (see Figure 13).

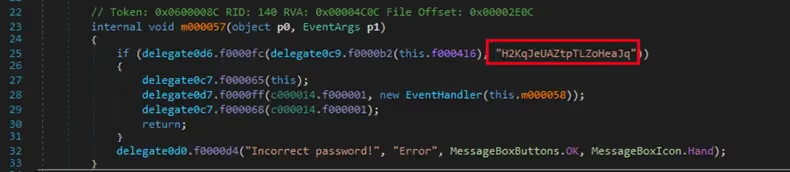

I used the Simple Assembly Explorer to successfully de-obfuscate the payload and found the authentication method and consequently the password (See Figure 14).

Following the password discovery, I wanted to test the tool. I set up a dummy database, which would allow me to play with it (see Figure 15).

This way, I could list all SQL Server databases (DBs) locally and sort tables by size to identify the most damaging data. At first, I thought it would scan and list all DBs in a network, but localhost was hardcoded and there was no networking functionality, so it was clear that the tool needed to run on each data server. I discovered that the tool also enabled the threat actors to filter the data by interesting keywords, such as ‘credit card’ and ‘passwords’, and then export it to CSV.

DATA EXFILTRATION

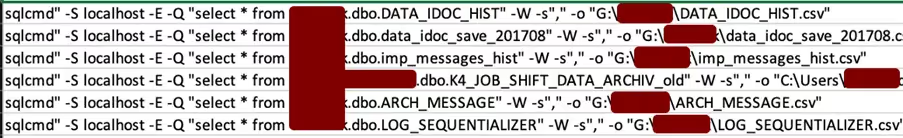

I observed the threat actor using the BlackByteSQLManager tool on some servers with (fortunately) non-sensitive data. As you would expect, the export functionality was as follows:

The sqlcmd utility targeted the local SQL Server instance, and ‘Windows Authentication’ made the operation non-interactive and eliminated the need for further user credentials (thanks to Domain Administrator privileges).

The data was then written into CSV files (See Figure 16).
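
For defenders, the export pattern described above lends itself to a simple command-line check. The sketch below is an assumption-laden illustration rather than the exact command from Figure 16: it relies on the documented sqlcmd options `-E` (trusted connection, i.e. Windows Authentication) and `-o` (output file), and the function name is hypothetical.

```python
import shlex


def is_sqlcmd_csv_export(command_line: str) -> bool:
    """Return True for sqlcmd runs that use Windows Authentication and write a .csv file."""
    try:
        # posix=False keeps Windows backslashes and quoted arguments intact.
        tokens = shlex.split(command_line, posix=False)
    except ValueError:
        return False  # unbalanced quotes; leave it for manual review
    if not tokens:
        return False
    exe = tokens[0].strip('"').replace("\\", "/").rsplit("/", 1)[-1].lower()
    if exe not in ("sqlcmd", "sqlcmd.exe"):
        return False
    trusted = "-E" in tokens  # sqlcmd options are case-sensitive
    outputs = [
        tokens[i + 1].strip('"')
        for i, tok in enumerate(tokens[:-1])
        if tok == "-o"
    ]
    return trusted and any(path.lower().endswith(".csv") for path in outputs)


if __name__ == "__main__":
    cmd = r'sqlcmd -S localhost -E -Q "SELECT * FROM dbo.Accounts" -s "," -o C:\temp\accounts.csv'
    print(is_sqlcmd_csv_export(cmd))
```

Legitimate administrators also export data this way, so a match is a prompt to look at who ran the command and from where, not proof of malicious activity.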

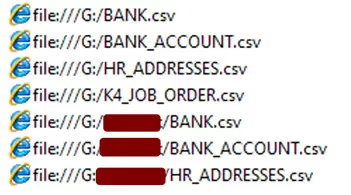

We then observed the attacker manually inspecting some tables whose names could indicate that they contained sensitive information, e.g. ‘Bank Accounts’ and ‘Human Resource Data’. As it turned out, these tables did not contain much information (See Figure 17).

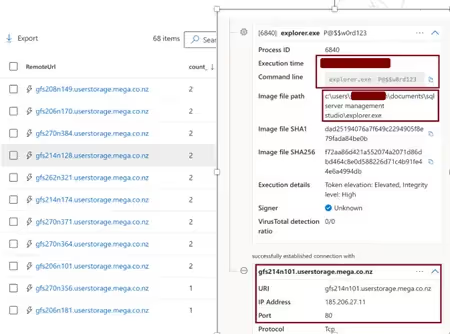

Another important observation we made was the attacker’s use of an unknown explorer.exe tool, which seemed to interact with the storage platform mega.co.nz. Though we could not get our hands on the sample to prove its data exfiltration functionality, it was a safe assumption that this was indeed the tool used to push the CSV files to the Mega storage platform (See Figure 18). Microsoft has published an analysis of this particular aspect.
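
One way to look for this kind of traffic in DNS or proxy logs is to match hostnames against the storage platform's domain and all of its subdomains. The following is a minimal sketch; the function name and the idea of feeding it hostnames from logs are assumptions for illustration.

```python
def matches_blocked_domain(hostname: str, blocked_suffixes=("mega.co.nz",)) -> bool:
    """Return True if hostname is a blocked domain or any subdomain of one."""
    host = hostname.strip().rstrip(".").lower()  # tolerate a trailing root dot
    return any(
        host == suffix or host.endswith("." + suffix)
        for suffix in blocked_suffixes
    )


if __name__ == "__main__":
    for name in ("g.api.mega.co.nz", "notmega.co.nz", "mega.co.nz.example.com"):
        print(name, matches_blocked_domain(name))
```

Requiring a leading dot before the suffix avoids matching unrelated names that merely end with the same characters.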

THEY ARE STILL HERE…

At this point in our investigation we had identified the entry vector, the compromised accounts and the lateral movement methods.

But crucially, we had also confirmed that sensitive data had not yet been exfiltrated.

All the tools and skills showcased so far pointed heavily towards a ransomware operation where encryption was imminent, so we had to act swiftly to try to cut the attackers off at the pass. To do it decisively and comprehensively, we needed to find all their persistence mechanisms.

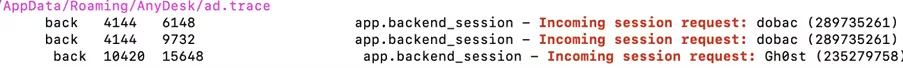

The first sign of persistence we found was AnyDesk. This was installed as a service on some selected servers. Upon inspecting the logs, we confirmed that in addition to the VPN access, which they were already exploiting, the attackers were coming in via AnyDesk (See Figure 19).

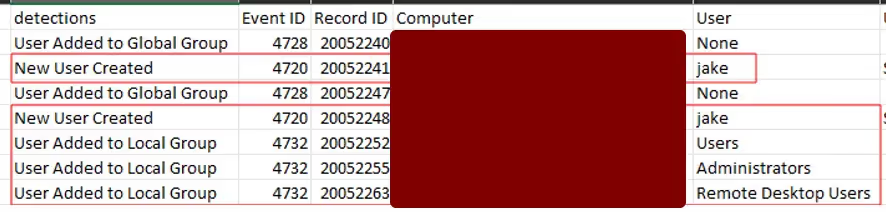

The second sign of persistence was the creation of local admin accounts, which were used over RDP (See Figure 20).

When the Incident Response team tried to eliminate the persistence secured by AnyDesk, we ran into a few issues:

- The difficulty of ascertaining which AnyDesk program should be cleaned up. This is because the organization was also using AnyDesk as part of their IT processes.

- The lack of EDR on part of the fleet. This obviously complicated the cleanup.

- The challenge of remediating while the attacker was still active. The AnyDesk logs showed the attackers were actually in the system at the same time we were investigating.

The attackers were still active because the in-house security team had not yet articulated their response policies (which would have led to the swift removal of the attackers).

The general state of unpreparedness for an attack meant that we were forced to make suboptimal calls. Our choice was as follows:

- Option 1 (the safe approach): Trigger a lockdown and isolate the company from the Internet until we finished the investigation. This would cut off attacker access and allow thorough remediation, but at the cost of business operations.

- Option 2 (the riskier approach): Attempt to surgically contain the attackers while the company was still connected to the Internet.

Considering the tactics and techniques that we had identified and our assessment that the attackers were not extremely advanced, we decided that Option 2 was the preferable course of action.

We carried out the following:

- Full migration and deployment of the EDR solution across all networks, as visibility is key.

- Takedown of the obsolete VPN gateway.

- Resetting/disabling of compromised privileged accounts.

- Identification at scale of the malicious AnyDesk installations that deviated from the compliant one (based on paths, hashes, and versions) and their automated removal.

- Setting up of custom alerts, based on the observed attacker patterns (e.g., alert on the SQL manager tool usage, alert on the *.mega.co.nz domain) and monitoring of malicious local admin accounts usage.

- Blocking of *.mega.co.nz and restriction of outgoing traffic from servers that did not need to connect to the Internet.
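
The identification of rogue AnyDesk installations in the list above can be sketched as a comparison against the approved deployment. This is an illustration under stated assumptions: the `Installation` record, the function name, and every path, hash and version in the example are hypothetical, and in practice the inventory would come from the EDR or another endpoint management tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Installation:
    host: str
    path: str
    sha256: str
    version: str


def find_rogue_installs(installs, approved_paths, approved_hashes, approved_versions):
    """Return (installation, reasons) pairs for installs deviating from the approved one."""
    paths = {p.lower() for p in approved_paths}  # Windows paths are case-insensitive
    hashes = {h.lower() for h in approved_hashes}
    rogue = []
    for inst in installs:
        reasons = []
        if inst.path.lower() not in paths:
            reasons.append("path")
        if inst.sha256.lower() not in hashes:
            reasons.append("hash")
        if inst.version not in approved_versions:
            reasons.append("version")
        if reasons:
            rogue.append((inst, reasons))
    return rogue


if __name__ == "__main__":
    good_path = r"C:\Program Files (x86)\AnyDesk\AnyDesk.exe"  # hypothetical approved path
    inventory = [
        Installation("srv1", good_path, "a" * 64, "7.1.0"),
        Installation("srv2", r"C:\ProgramData\svc\AnyDesk.exe", "b" * 64, "7.1.0"),
    ]
    for inst, reasons in find_rogue_installs(inventory, {good_path}, {"a" * 64}, {"7.1.0"}):
        print(inst.host, reasons)
```

Recording why each installation was flagged makes it easier to review the results before any automated removal is triggered.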

This short list coupled with intensive monitoring enabled us to keep the business operational while kicking out the attackers – with minimal disruption (impact on sleep not included).

While not exactly risk-zero, this approach was the best option because we were sure we had the tooling and the skill to execute an efficient and effective investigation that would enable us to catch up with the attacker and act at scale.

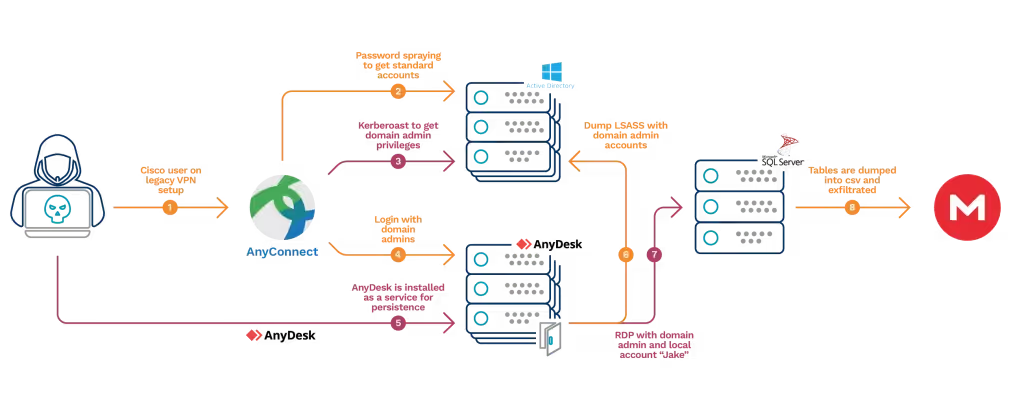

THE ATTACK STORY, WITH A FOCUS ON THE MOST RELEVANT ELEMENTS OF THE KILL CHAIN

Key Takeaways

Once the crisis was averted, we identified several key takeaways and recommendations that organizations can follow to protect themselves from ransomware attacks by BlackByte:

1. Enforce MFA everywhere applicable, especially for VPN use.

2. Leverage threat intel and IP reputation services to monitor for any successful connections to VPNs from VPS or certain foreign countries.

3. Ensure consistent and correct deployment of EDR solutions so that, among other things, alerts can be processed and escalated accordingly.

4. Perform Active Directory assessments to look for privilege escalation paths.

5. Enforce a strong password policy, particularly for service accounts, which are a weakness we see in most environments we analyze.

6. Consider blocking file storage platforms and commercial remote access tools such as AnyDesk, TeamViewer, and ngrok, and set up alerts on any connection attempts, especially from servers.

7. Make sure you understand the risks introduced by all third-party partners and service providers, and for those who need remote access, consider the following:

- Provide access through a zero trust architecture, removing the need for VPN.

- If VPN is required, ensure your partner connects to a bastion host under your control before they can interact with the LAN.

- Consider blocking or restricting remote protocols from internal VPN IP ranges, since partners rarely need access to the entire LAN range.

This list is obviously not exhaustive but implementing the recommendations will go a long way toward helping organizations protect themselves from ransomware attacks like the one we saw in this case.

The good news is that while cyber-attacks and attempted attacks will continue, companies that make the effort to identify threat actors as quickly as possible and continually improve their defenses can stop criminals in their tracks and prevent widespread damage.
